Overview

In this organization, approximately eight thousand IT staff supported two thousand applications across twenty business-facing IT areas. The delivery model was predominantly waterfall, with some continuous integration (CI) and test-driven development (TDD) practices in the more advanced areas. Development methods varied across the enterprise, resulting in inconsistent quality and delivery problems.

Because of these issues, the board considered outsourcing more work. However, a senior vice president who had seen promising results from Agile practices was given one year to run an experiment to demonstrate whether these practices could be established and scaled within a centralized development organization to drive in-sourcing.

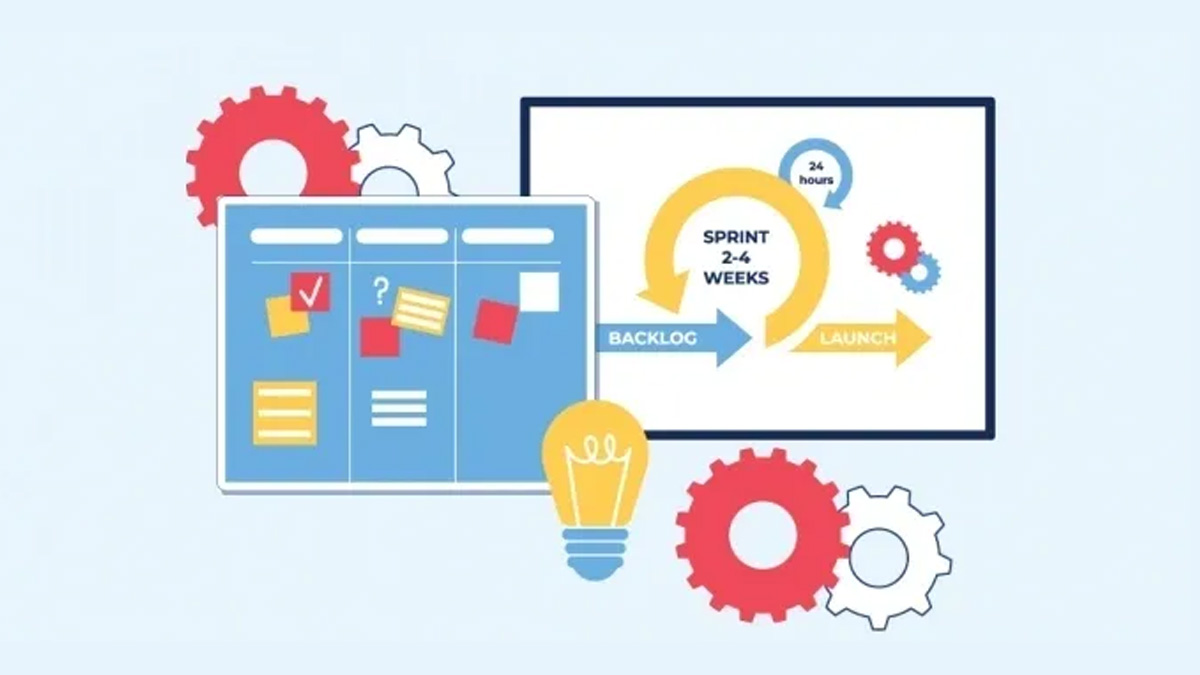

CI/TDD and Full Agile Stack Adoption

The journey began by establishing consistent Agile management practices across all technologies (e.g., standups, visual system management, iterations, iteration planning, and show and tells). Engineering practices were adapted to each technology: version control for all source code, CI for Java and .NET, TDD, and automated acceptance testing for all stories. Continuous peer-based learning and improvement processes were put in place to sustain these practices and scale them across the enterprise.

Within one year, the experiment proved successful, with significant improvements in quality (an 80% reduction in critical defects), productivity (82% of Agile teams ranked in the top quartile industry-wide), availability (a 70% increase), and on-time delivery (90%, up from 60%). As a result, over five years the number of Agile teams grew from three to more than two hundred, and the share of IT development work done by Agile teams rose to over 70%.

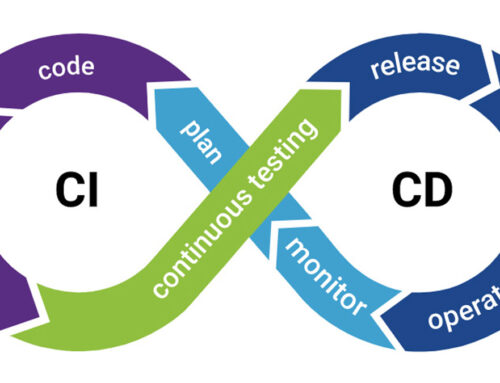

Adopting Lean Engineering Practices (DevOps)

While Agile implementation improved efficiency and speed during the development cycle, wait states persisted at the beginning and end of the cycle, creating a “water-Scrum-fall” effect. Sixty percent of the time spent on an initiative elapsed before a story card even entered an Agile team’s backlog. Once work left the final iteration, high-ceremony manual practices lengthened deployment lead times, compounded by disparate release and deployment practices and technologies and by the dependencies that large release batches created.

The focus then shifted to reducing process variation across the delivery pipeline, in areas such as work intake, release, deployment, and change. Lean practices such as A3 problem solving and value stream analysis were applied to identify improvement opportunities and reduce end-to-end lead time. The result was an integrated continuous delivery (CD) foundation pipeline that provided visibility and accelerated delivery. Other initiatives to reduce wait times included infrastructure automation and expanded test automation, including service virtualization and test data management.

Small Batch Sizes, APIs, Microservices, Monitoring, and Metrics

A CD foundation with automated infrastructure would not, on its own, significantly reduce lead times; the dependencies that created wait states for Agile teams also had to be addressed. The next step therefore focused on small batch sizes and modern web architecture practices (APIs and microservices). Other techniques for removing dependencies included dark launching, feature flags, and canary testing, which supported a shift from scheduled releases to releasing when work was ready. Greater emphasis was also placed on infrastructure as code, including containerization.
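Dark launching, feature flags, and canary testing are named here but not described. The following is a minimal sketch of one common way they fit together, assuming a hypothetical in-process `FeatureFlag` record and `is_enabled` helper rather than any particular flag service or the organization's own tooling: code is deployed with the flag off (a dark launch), then exposed to a small, deterministic percentage of users (a canary) before a wider rollout.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class FeatureFlag:
    """Hypothetical flag record: code ships dark and is switched on separately."""
    name: str
    enabled: bool = False     # dark launch: deployed to production but not exposed
    rollout_percent: int = 0  # canary: share of users (0-100) who see the feature


def bucket(flag_name: str, user_id: str) -> int:
    """Deterministically map a user to a bucket in [0, 100) for this flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: FeatureFlag, user_id: str) -> bool:
    """Return True if this user should see the flagged feature."""
    if not flag.enabled:
        return False
    return bucket(flag.name, user_id) < flag.rollout_percent


# Illustrative usage: deploy dark, then canary the feature to 5% of users.
checkout_flag = FeatureFlag("new-checkout")  # deployed, not yet exposed
checkout_flag.enabled = True
checkout_flag.rollout_percent = 5
exposed = sum(is_enabled(checkout_flag, f"user-{i}") for i in range(10_000))
print(f"{exposed} of 10000 users see the new checkout")
```

Hashing the flag name together with the user ID keeps each user's experience stable across requests, and raising `rollout_percent` only adds users: anyone whose bucket falls under the old threshold also falls under the new one. Releasing then becomes a flag change rather than a scheduled deployment.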

Improving lead time required first measuring it end to end. The integrated pipeline and workflow made it possible to baseline lead time, measure improvements against that baseline, and identify wait states within the delivery value stream.
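Once pipeline and workflow events carry timestamps, the baseline can be computed directly. The following is a minimal sketch, assuming a hypothetical `StageEvent` record for each stage a work item passes through and assuming stages run one after another without overlapping; it computes end-to-end lead time, the wait states between stages, and the share of lead time spent in active work (often called flow efficiency). The stage names and dates are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StageEvent:
    """One stage of work on a single item (hypothetical record; names are illustrative)."""
    stage: str
    start: datetime
    end: datetime


def lead_time(events: list[StageEvent]) -> timedelta:
    """End-to-end lead time: first stage start to last stage finish."""
    return max(e.end for e in events) - min(e.start for e in events)


def wait_states(events: list[StageEvent]) -> list[tuple[str, str, timedelta]]:
    """Idle gaps between consecutive stages, i.e. time when no stage was active."""
    ordered = sorted(events, key=lambda e: e.start)
    gaps = []
    for prev, nxt in zip(ordered, ordered[1:]):
        gap = nxt.start - prev.end
        if gap > timedelta(0):
            gaps.append((prev.stage, nxt.stage, gap))
    return gaps


def flow_efficiency(events: list[StageEvent]) -> float:
    """Fraction of lead time spent in active stages (assumes stages do not overlap)."""
    active = sum((e.end - e.start for e in events), timedelta(0))
    return active / lead_time(events)


# Illustrative usage with made-up timestamps for one work item.
t0 = datetime(2024, 1, 1)
events = [
    StageEvent("intake", t0, t0 + timedelta(days=2)),
    StageEvent("build", t0 + timedelta(days=10), t0 + timedelta(days=13)),
    StageEvent("deploy", t0 + timedelta(days=20), t0 + timedelta(days=21)),
]
print(lead_time(events))        # 21 days, 0:00:00
print(wait_states(events))      # gaps of 8 days and 7 days
print(flow_efficiency(events))  # about 0.29 (6 active days out of 21)
```

In this made-up example, 15 of the 21 days are waiting between stages, which is the kind of signal that points improvement work at hand-offs rather than at the stages themselves.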

As delivery accelerated, real-time monitoring and feedback became crucial, both for tracking operational performance and for gathering customer feedback to determine whether each business case “hypothesis” actually drove more customer value, including through multivariate A/B testing.
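Testing a business case “hypothesis” usually means checking whether a measured difference between variants is larger than chance would explain. The following is a minimal sketch of the simplest two-variant case, using a standard two-proportion z-test on conversion counts; it is an illustration under that assumption, not the organization's method. A genuinely multivariate test compares more variants or factor combinations and would also need a correction for multiple comparisons. The function name and the counts in the usage line are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rate between variants A and B.

    Returns (z, p_value). Uses the pooled proportion under the null hypothesis
    that both variants convert at the same rate.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative usage: variant B converts 260 of 5000 users vs. 200 of 5000 for A.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely under the “no effect” hypothesis; whether it represents enough customer value to justify the change is still a business judgment.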